Auto Updating Binary In Go

Introduction

When real customers use your app, updating it should ideally not require them to download a new binary manually. In this post I show a proof of concept in Go in which a binary can update itself on request. The text and code are written by me, but Claude AI helped me with section names and some typos.

Common Binary Update Challenges

There are some common problems related to updating a binary:

- Different operating systems handle files differently. For example, Windows does not allow you to replace a file that is currently being used by another process. Unix systems generally allow this, because deleting the “file” only removes a directory entry (a link) that points to the data; the data itself stays around until the last process using it lets go of it.

- Verifying the authenticity of the updated binary before using it.
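To make the first point more concrete, here is a minimal sketch of the Unix behaviour: a file is opened, deleted, and then still read through the open handle. The function name readAfterUnlink and the file name app.bin are made up for this example, and the sketch assumes a Unix-like system; on Windows I would expect the removal step to fail instead.

```go
package main

import (
	"fmt"
	"io"
	"os"
	"path/filepath"
)

// readAfterUnlink creates a file, opens it, deletes it from its directory and
// then reads it through the still-open handle. Hypothetical helper for this
// demo; expected to succeed on Unix-like systems only.
func readAfterUnlink() (string, error) {
	dir, err := os.MkdirTemp("", "unlink-demo")
	if err != nil {
		return "", err
	}
	defer os.RemoveAll(dir)

	name := filepath.Join(dir, "app.bin")
	if err := os.WriteFile(name, []byte("old contents"), 0o600); err != nil {
		return "", err
	}

	f, err := os.Open(name)
	if err != nil {
		return "", err
	}
	defer f.Close()

	// Remove only deletes the directory entry. The open handle keeps the
	// underlying data alive until it is closed.
	if err := os.Remove(name); err != nil {
		return "", err
	}

	data, err := io.ReadAll(f)
	if err != nil {
		return "", err
	}
	return string(data), nil
}

func main() {
	got, err := readAfterUnlink()
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Printf("read %q after the file was removed\n", got)
}
```

A running executable behaves like the open handle here: the process keeps using the old data while the name on disk can already point to something new.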

Demonstrating OS Differences

To demonstrate the first problem, I cross-compiled code that tries to replace itself on disk for both platforms.

// binary name: TestSelfModifying
package main

import (
	"fmt"
	"os"
)

func main() {
	// Path this binary was started with.
	path := os.Args[0]

	// Read our own contents so that we can write a backup copy.
	c, err := os.ReadFile(path)
	if err != nil {
		panic(err)
	}

	buName := fmt.Sprintf("%s.bu", path)
	err = os.WriteFile(buName, c, 0600)
	if err != nil {
		panic(err)
	}

	// Try to overwrite the binary that is currently running.
	err = os.WriteFile(path, []byte("random_contents"), 0600)
	if err != nil {
		panic(err)
	}
}

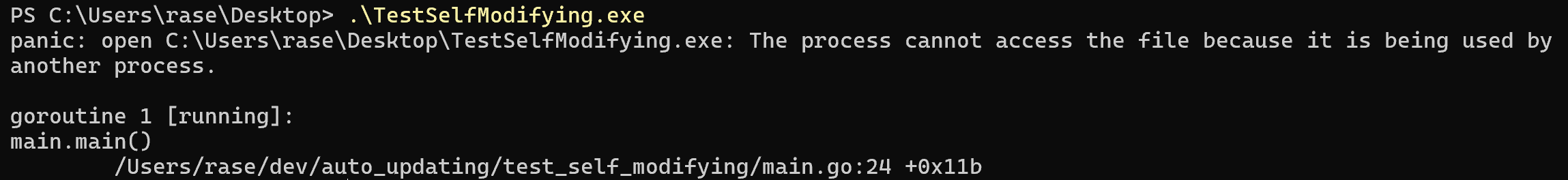

Running this on Windows yields:

Running the same binary on a Unix machine (Darwin in my case) yields:

Implementation: User-Triggered Binary Updates

Overview

Two binaries will be created:

- The binary that needs updating

- The binary that handles updating the existing binary

In addition to these two binaries, I have set up a GitHub repository that hosts the new release of the binary. This could be any remote server that contains the updated binary.

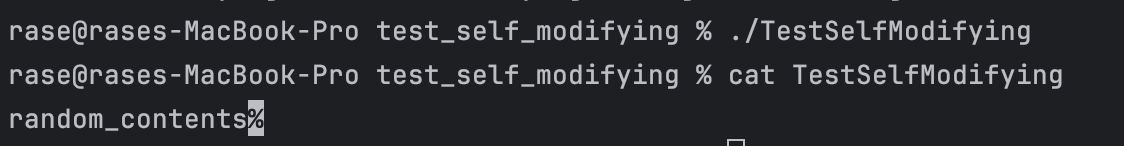

Publisher publishes a new release

Although this blog post focuses on the client side, I think it’s beneficial to show an example of how the publisher would generate a signed (“encrypted”) checksum when releasing a new binary. The algorithm and the location of the signature file have to be known to the client side. The publisher’s command first generates a SHA-256 checksum of the file and then signs that checksum with an RSA private key.
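As a rough illustration of that publisher step, here is a minimal sketch in Go rather than as a shell command. It assumes RSA-PSS with SHA-256, which is what the updater further down verifies with. The helper names newDemoKey, signRelease and verifyRelease are hypothetical, and the key is generated on the fly only so that the example is self-contained; a real publisher would load an existing private key and write the signature to signature.bin.

```go
package main

import (
	"crypto"
	"crypto/rand"
	"crypto/rsa"
	"crypto/sha256"
	"fmt"
)

// newDemoKey generates a throwaway RSA key for this example only.
func newDemoKey() (*rsa.PrivateKey, error) {
	return rsa.GenerateKey(rand.Reader, 2048)
}

// signRelease hashes the binary contents with SHA-256 and signs the digest
// with RSA-PSS using default options.
func signRelease(priv *rsa.PrivateKey, binary []byte) ([]byte, error) {
	digest := sha256.Sum256(binary)
	return rsa.SignPSS(rand.Reader, priv, crypto.SHA256, digest[:], nil)
}

// verifyRelease is the client-side counterpart: it hashes the received
// contents and checks the signature against that digest.
func verifyRelease(pub *rsa.PublicKey, binary, sig []byte) error {
	digest := sha256.Sum256(binary)
	return rsa.VerifyPSS(pub, crypto.SHA256, digest[:], sig, nil)
}

func main() {
	priv, err := newDemoKey()
	if err != nil {
		fmt.Println("key generation failed:", err)
		return
	}

	binary := []byte("pretend these are the bytes of the new binary")
	sig, err := signRelease(priv, binary)
	if err != nil {
		fmt.Println("signing failed:", err)
		return
	}
	// A real publisher would now store sig in signature.bin next to the binary.

	fmt.Println("genuine binary accepted:", verifyRelease(&priv.PublicKey, binary, sig) == nil)
	fmt.Println("tampered binary accepted:", verifyRelease(&priv.PublicKey, []byte("tampered"), sig) == nil)
}
```

Only the public half of the key has to be shipped to clients; the private key never leaves the publisher.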

Main binary

// binary name: AutoUpdateBinary
package main

import (
	"log"
	"os"
	"os/exec"
	"runtime"
	"strconv"
)

func main() {
	args := os.Args
	if len(args) != 2 {
		log.Fatalf("Usage: %s run/update\n", args[0])
	}
	binary := args[0]
	mode := args[1]
	selfPid := os.Getpid()
	log.Printf("PID: %d\n", selfPid)

	if mode == "run" {
		log.Println("Simulating work")
	} else if mode == "update" {
		// Using exec.Command + Start instead of syscall.Exec: syscall.Exec replaces the
		// current process and keeps its PID, whereas exec.Command + Start spawns a
		// separate child process with its own PID.
		var pathToUpdater string
		if runtime.GOOS == "windows" {
			pathToUpdater = "C:\\Users\\rase\\Desktop\\AutoUpdateUpdater.exe"
		} else {
			pathToUpdater = "/Users/rase/dev/auto_updating/auto_update_updater/AutoUpdateUpdater"
		}

		// Hand our PID and our own path to the updater, then exit so that it can replace us.
		execCmd := exec.Command(pathToUpdater, strconv.Itoa(selfPid), binary)
		if err := execCmd.Start(); err != nil {
			log.Fatal(err)
		}
		log.Println("Updating binary...")
		os.Exit(0)
	} else {
		log.Fatal("Unknown mode, expected run/update")
	}
}

When the binary is called with the “update” argument, it will call the updater binary. Remember that Windows does not let you modify a file while a process is still using it. This means that the binary that needs updating cannot be running while it’s being updated.

We can work around this restriction by creating a new process that will update the binary. In Go, we do this with the os/exec package. When calling the subprocess, we pass in:

- The current program’s pid so that the updater can make sure that the process has already died before continuing with the update

- Path to the binary that needs updating to know what to replace with the new binary

We pass both values explicitly because if the child process tried to look this information up itself through its parent PID, the parent could already be dead by then, and its PID could either have been reused by another process or not exist at all. I suggest reading the main function from the next code snippet first and then going into the specific functions. I made the names self-explanatory.

Updater binary

// binary name: AutoUpdateUpdater
package main

import (
	"crypto"
	"crypto/rsa"
	"crypto/sha256"
	"crypto/x509"
	"encoding/pem"
	"errors"
	"fmt"
	"io"
	"log"
	"os"
	"os/exec"
	"path/filepath"
	"strconv"
	"time"
)

// waitUntilPidIsDead waits up to 20 seconds for a pid to disappear to know the process has stopped running.
func waitUntilPidIsDead(pid int) (success bool) {
	for c := 1; c <= 20; c++ {
		time.Sleep(1 * time.Second)

		// Checking that a pid exists happens in a different way depending on the OS (windows/unix). I have implemented the function for both operating systems with go build flags.
		// If you're interested in seeing how they work you can check the code in the repository.
		// Darwin: https://github.com/52617365/example_binary_updater/blob/e7d1de720cb090274fa78b0af8645bac4ea2e9cd/pid_darwin.go
		// Windows: https://github.com/52617365/example_binary_updater/blob/e7d1de720cb090274fa78b0af8645bac4ea2e9cd/pid_windows.go
		pExists, err := pidExists(pid)
		if err != nil {
			log.Fatalf("pid check number %d returned an error: %v", c, err)
		}
		if !pExists {
			return true
		}
	}
	return false
}

func main() {
	if len(os.Args) != 3 {
		log.Fatalf("Usage: %s <pid> <path to binary to update>", os.Args[0])
	}
	parentPidString := os.Args[1]
	binaryToUpdate := os.Args[2]

	parentPidInt, err := strconv.Atoi(parentPidString)
	if err != nil {
		log.Fatalf("Invalid pid: %v", err)
	}

	success := waitUntilPidIsDead(parentPidInt)
	if !success {
		log.Fatalf("We waited 20 seconds for the ppid %d to die but it did not. We will not kill the parent process because it could lead to unexpected consequences.", parentPidInt)
	}

	remoteBinaryPathDirectory := fetchNewBinaryFromRemote()

	publishersEncryptedShasum, err := getBinarySignature(remoteBinaryPathDirectory)
	if err != nil {
		log.Fatalf("could not read the signature from the fetched repository: %v", err)
	}

	// filepath (not path) is used for everything on disk so that Windows separators work too.
	remoteBinaryPath := filepath.Join(remoteBinaryPathDirectory, "AutoUpdateBinary")
	remoteBinaryShasum := generateLocalSha256FromBinary(remoteBinaryPath)

	publishersPublicKey, err := getPublishersPublicKey()
	if err != nil {
		log.Fatalf("Error reading publishers public key: %v", err)
	}

	err = verifyPublishersSignature(publishersPublicKey, remoteBinaryShasum, publishersEncryptedShasum)
	if err != nil {
		log.Fatalln("Invalid signature")
	}
	log.Println("The signature was correct, updating the binary")

	err = updateBinary(binaryToUpdate, remoteBinaryPath)
	if err != nil {
		log.Fatalln(err)
	}

	// Best-effort cleanup of the temporary directory that holds the fetched files.
	_ = os.RemoveAll(filepath.Dir(remoteBinaryPathDirectory))

	// Either restart the original GUI/CLI or just exit
}

// getBinarySignature reads the publisher's signature.bin file, which contains the signed ("encrypted") checksum.
func getBinarySignature(remoteBinaryPathDirectory string) ([]byte, error) {
	encryptedShasumPath := filepath.Join(remoteBinaryPathDirectory, "signature.bin")
	return os.ReadFile(encryptedShasumPath)
}

// getPublishersPublicKey reads the publisher's public key from a file next to the updater.
// This public key could also be stored in some store somewhere.
func getPublishersPublicKey() (*rsa.PublicKey, error) {
	currentExecutableRootPath := filepath.Dir(os.Args[0])
	publicKeyPath := filepath.Join(currentExecutableRootPath, "public.pub")
	return readPublicKey(publicKeyPath)
}

// updateBinary retrieves the permissions of the original binary, and writes the new binary in its place with the same permissions.
func updateBinary(dst string, src string) error {
	dstFileStats, err := os.Stat(dst)
	if err != nil {
		return err
	}
	dstFilePermissions := dstFileStats.Mode()

	srcContents, err := os.ReadFile(src)
	if err != nil {
		return err
	}

	// Creating a backup of the destination file.
	unixStamp := time.Now().Unix()
	err = os.Rename(dst, fmt.Sprintf("%s.bak_%d", dst, unixStamp))
	if err != nil {
		return err
	}

	return os.WriteFile(dst, srcContents, dstFilePermissions)
}

// readPublicKey reads a PEM-encoded PKIX public key from filename and returns it as an RSA public key.
func readPublicKey(filename string) (*rsa.PublicKey, error) {
	keyData, err := os.ReadFile(filename)
	if err != nil {
		return nil, err
	}

	block, _ := pem.Decode(keyData)
	if block == nil {
		return nil, fmt.Errorf("failed to parse PEM block containing the public key")
	}

	pub, err := x509.ParsePKIXPublicKey(block.Bytes)
	if err != nil {
		return nil, err
	}

	rsaPubKey, ok := pub.(*rsa.PublicKey)
	if !ok {
		return nil, fmt.Errorf("failed to parse RSA public key")
	}
	return rsaPubKey, nil
}

// verifyPublishersSignature verifies that the signature created by the release publisher is correct.
// The publisher first generates a sha256sum from the file contents and then signs this checksum with their private key, we call this the "encrypted checksum".
// On the "client" side, a sha256sum is generated from the file contents and the signature is checked against it with the public key.
// Conceptually the two checksums are compared and if they're equal the signature is correct; rsa.VerifyPSS does the padding check and the comparison for us.
// This is the same hash-then-sign idea that CAs use when they sign certificates.
func verifyPublishersSignature(publicKey *rsa.PublicKey, fileChecksum []byte, signature []byte) error {
	err := rsa.VerifyPSS(publicKey, crypto.SHA256, fileChecksum, signature, nil)
	if err != nil {
		return fmt.Errorf("signature verification failed: %w", err)
	}
	return nil
}

// generateLocalSha256FromBinary streams the file at p through SHA-256 and returns the raw digest.
func generateLocalSha256FromBinary(p string) []byte {
	f, err := os.Open(p)
	if err != nil {
		log.Fatal(err)
	}
	defer f.Close()

	h := sha256.New()
	if _, err := io.Copy(h, f); err != nil {
		log.Fatal(err)
	}
	return h.Sum(nil)
}

// gitInPath checks that "git" is installed on the system and can be found through PATH. For the sake of this blog post, the function checks that git is in the path, but if you're using some
// other remote server you don't need to do this. Even if you used git but did not want to add it to your path, you could specify the full path to it and use it that way.
func gitInPath() bool {
	_, err := exec.LookPath("git")
	// exec.ErrDot means git was found relative to the current directory, which is fine for this check.
	if errors.Is(err, exec.ErrDot) {
		err = nil
	}
	return err == nil
}

// fetchNewBinaryFromRemote fetches the new binary that is being used to update the current one from a remote server.
// This remote server could be anything but for the sake of example in this blog post it is a git repository.
// It returns the directory that contains the fetched files.
func fetchNewBinaryFromRemote() string {
	if !gitInPath() {
		log.Fatal("Git is not installed on the system")
	}

	remoteName := "example_binary_for_updater"
	binaryRemotePath := fmt.Sprintf("git@github.com:52617365/%s.git", remoteName)

	dir, err := os.MkdirTemp("", "")
	if err != nil {
		log.Fatal(err)
	}
	// Note: dir is deliberately not removed here. A deferred os.RemoveAll(dir) would delete
	// the cloned files as soon as this function returns, before the caller has read them.

	fullPathToRemote := filepath.Join(dir, remoteName)
	_, err = exec.Command("git", "clone", binaryRemotePath, fullPathToRemote).Output()
	if err != nil {
		log.Fatalf("Failed to fetch new binary: %v", err)
	}
	log.Printf("Successfully fetched new binary from remote")

	pathToGitFolder := filepath.Join(fullPathToRemote, ".git")
	err = os.RemoveAll(pathToGitFolder)
	if err != nil {
		log.Fatalf("Failed to remove .git folder: %v", err)
	}
	return fullPathToRemote
}
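The updater calls pidExists, which lives in the linked repository and is not shown in this post. Purely as an illustration, and not necessarily how the repository does it, a Unix variant could look like the sketch below. It assumes a Unix-like system and relies on the convention that sending signal 0 only performs the existence and permission checks without delivering anything. Windows needs a different implementation.

```go
package main

import (
	"errors"
	"fmt"
	"os"
	"syscall"
)

// pidExists reports whether a process with the given pid currently exists.
// Unix-only sketch (an assumption, not the code from the repository).
func pidExists(pid int) (bool, error) {
	if pid <= 0 {
		// 0 and negative values address process groups, not a single process.
		return false, fmt.Errorf("invalid pid %d", pid)
	}

	proc, err := os.FindProcess(pid)
	if err != nil {
		return false, err
	}

	// Signal 0 delivers nothing; the kernel only checks whether it could.
	err = proc.Signal(syscall.Signal(0))
	switch {
	case err == nil:
		return true, nil
	case errors.Is(err, os.ErrProcessDone), errors.Is(err, syscall.ESRCH):
		// No such process.
		return false, nil
	case errors.Is(err, syscall.EPERM):
		// The process exists but belongs to someone else.
		return true, nil
	default:
		return false, err
	}
}

func main() {
	alive, err := pidExists(os.Getpid())
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	fmt.Println("own process exists:", alive)
}
```

Keep in mind that a PID can be reused by an unrelated process, so a check like this only tells you that some process currently has that PID.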

To summarize, the whole flow looks like this:

- The client uses a binary and wants updates from a trusted publisher. It expresses this trust by trusting the publisher’s public key.

- The publisher decides to publish a new release of the binary:

  - Creates a SHA-256 sum of the new binary

  - Signs the sum with their private key

  - Stores this signed sum in a file called “signature.bin”

- The client wants to update their binary:

  - The client fetches the new binary and its signature from the remote server

  - The client generates a SHA-256 sum of the new binary and uses the trusted publisher’s public key to check the signature

  - Conceptually, the client matches the sum of the new binary with the sum that the publisher signed

  - If the sums match, we can be sure that the new release was provided by someone who had access to the private key that corresponds to the public key we trust

Note: This is not bulletproof. If the publisher’s private key gets into the wrong hands, a malicious actor could replace the binary with their own and sign it with the stolen private key.

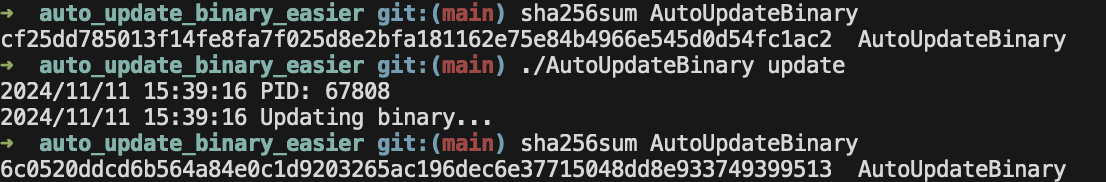

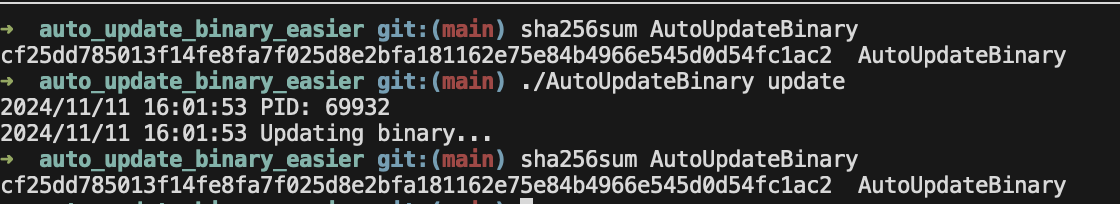

Running the code

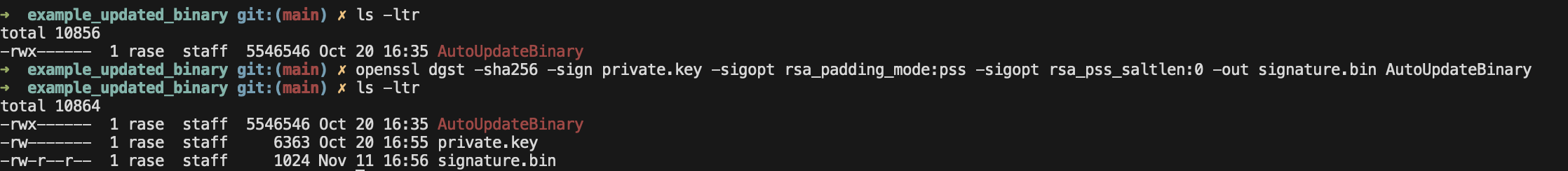

Windows:

Darwin:

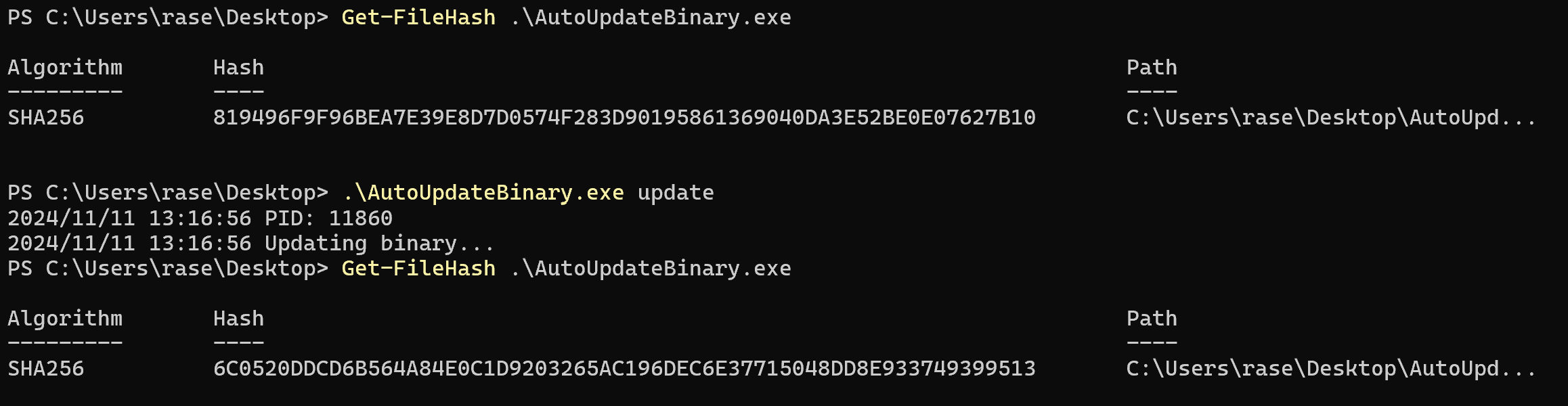

Now, what happens when the authenticity check fails? I manually modified the signature.bin.

Unfortunately, the main binary cannot display the authenticity check error because it has already exited by that point, but the updater could print it out for the user to see. As you can see, however, the checksum of the binary has not changed. This is because the authenticity check failed.

Future improvements

You could (and probably should) add a check to the main binary that looks for newly released updates. You can do this in many ways, but one way would be to have the publisher include the binary version in the data that gets signed. The client could then compare that version to its own and prompt the user to update. If you want updates to happen automatically, you could have a daemon running at all times that keeps checking the remote server for new updates and updates the binary when it finds one. An alternative would be to check for updates on startup and then signal the daemon on demand from the main binary, so that the daemon does not have to poll the remote server all the time. This can be tricky to get working cross-platform because Windows does not support all the same signals as Unix. You could also have an API for it, but this is all for you to figure out.
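As a starting point for such a check, here is a minimal sketch of the version comparison itself. It assumes versions are plain dotted numbers such as 1.4.2, optionally prefixed with a v; the function names parseVersion and isNewer are hypothetical, and how the remote version reaches the client is left open.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parseVersion turns a dotted version such as "1.4.2" or "v1.4.2" into its
// numeric parts.
func parseVersion(v string) ([]int, error) {
	parts := strings.Split(strings.TrimPrefix(v, "v"), ".")
	nums := make([]int, len(parts))
	for i, p := range parts {
		n, err := strconv.Atoi(p)
		if err != nil {
			return nil, fmt.Errorf("invalid version %q: %w", v, err)
		}
		nums[i] = n
	}
	return nums, nil
}

// isNewer reports whether remote is a higher version than local. Missing
// trailing parts count as zero, so "1.2" and "1.2.0" are treated as equal.
func isNewer(local, remote string) (bool, error) {
	l, err := parseVersion(local)
	if err != nil {
		return false, err
	}
	r, err := parseVersion(remote)
	if err != nil {
		return false, err
	}
	for i := 0; i < len(l) || i < len(r); i++ {
		var lv, rv int
		if i < len(l) {
			lv = l[i]
		}
		if i < len(r) {
			rv = r[i]
		}
		if lv != rv {
			return rv > lv, nil
		}
	}
	return false, nil
}

func main() {
	newer, err := isNewer("1.9.0", "1.10.0")
	if err != nil {
		fmt.Println("error:", err)
		return
	}
	if newer {
		fmt.Println("A new version is available, run the binary with \"update\".")
	}
}
```

Comparing the parts numerically matters here: a plain string comparison would rank 1.9.0 above 1.10.0.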

Conclusion

Frameworks exist that do this stuff in a more sophisticated way, and I’m not necessarily telling you to use your own implementation in PROD instead of some well-maintained and battle-tested framework. The perks of implementing your own should not be ignored, however. It gives you the flexibility of crafting something for your use case only instead of providing a general framework for everyone to use. The more general a framework is, the more assumptions it has to make, which can lead to slower and less robust code. On top of this, if you write it yourself, you have already audited the code, which matters because in a lot of environments you can’t just npm install a new framework into your program.

The right answer obviously depends on the situation but regardless of the right answer I think it’s important to understand the general gist of how it works.

Thank you for reading this post. I haven’t had a lot of time to write blog posts because I’ve been very busy. Thankfully I graduated from school, so I can use that time to work on my projects now.